The Game and the Experiment

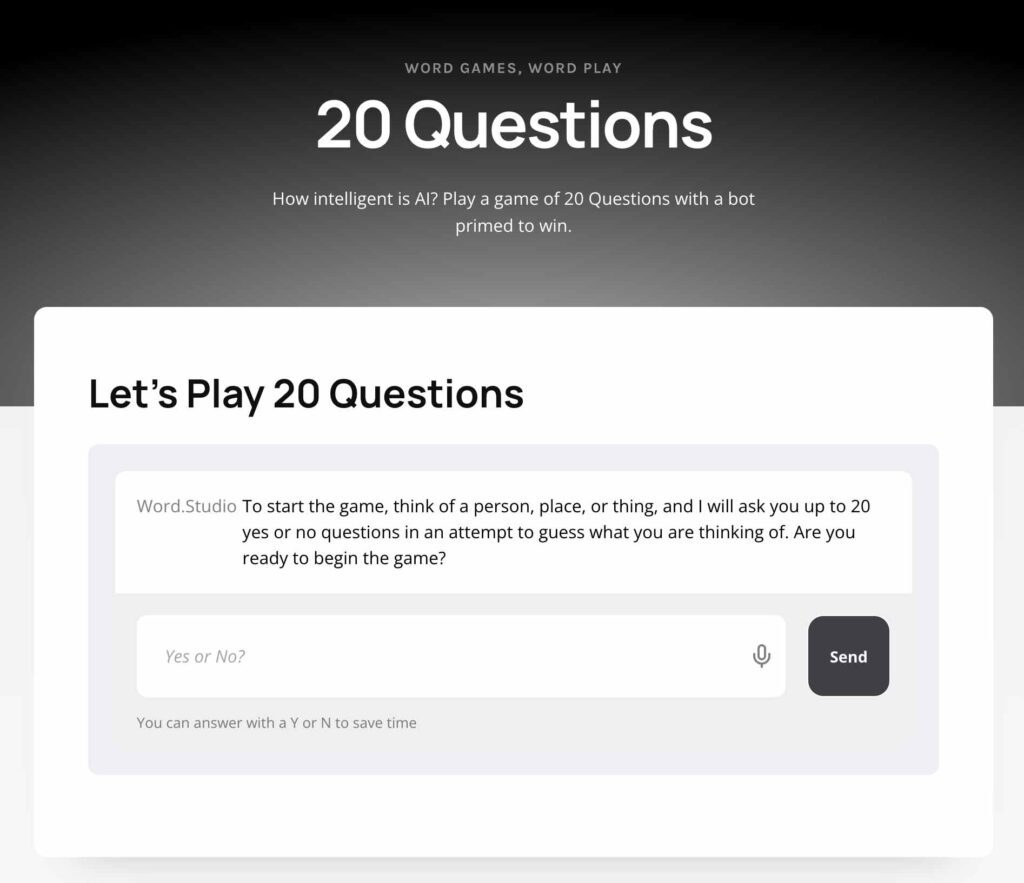

We recently started experimenting with building AI chat-based word and role-playing games. One of the first ideas we tested in 2023 was the classic guessing game, 20 Questions. Most people are familiar with the rules: One player thinks of an object, and the other player can ask up to 20 yes-or-no questions to figure out what it is. We built a chat-based version where AI can play by asking questions to guess what you’re thinking. It worked well, but far from perfect. Playing with it can be interesting but sometimes frustrating. AI can occasionally make wildly good guesses. But it often struggles with basic game rules and consistency. It might lose track of how many questions have been asked, ask redundant questions, or give answers that make you suspect it’s “cheating”. It turns out this simple game can reveal a lot about what current AI can and can’t do.

If you want to check it out, you can play with our 20 Question AI game here (You’ll need a Word Studio subscription to fully play) or try our custom GPT we made available on ChatGPT for free on the GPT Store.

Does It Actually Work?

It’s complicated. The tool works by using our custom instructions combined with the ChatGPT 4o model (at the time of this writing). Modern AI models like ChatGPT, Gemini, Grok or Claude are all built on large language models (LLMs), which generate text by statistically predicting likely sequences of words. This may be an oversimplification, they can also output images, video, and code, but the important part is that they were not specifically trained to play 20 Questions. They are general-purpose chatbots.

We built our 20 questions game with custom instructions sent along with player responses to an LLM. Any ability and LLM has to play games, without access to tools or outside memory, is more of a lucky side effect of learning from so much text. The fact that it can play any word games at all is an unintended side effect of the model pre-training.

Early Game-playing AI’s were Built Differently

Before large language models, most game-playing AI systems were built to master a specific game. Each one designed to excel at a very specific task. Deep Blue, the computer that beat world chess champion Garry Kasparov in 1997, didn’t “understand” chess. It calculated millions of moves very fast and followed built-in programming.

Other popular AI systems followed the same pattern. IBM’s Watson, which won Jeopardy! in 2011, could scan huge databases and match clues to likely answers. But it couldn’t hold a conversation or play any other games. AlphaGo, famous for its brilliant Go moves, learned by playing millions of games against itself. It was amazing at Go but useless at anything else. Poker bots like Libratus in 2017 relied on mathematical models of bluffing and risk.

The Original 20Q AI

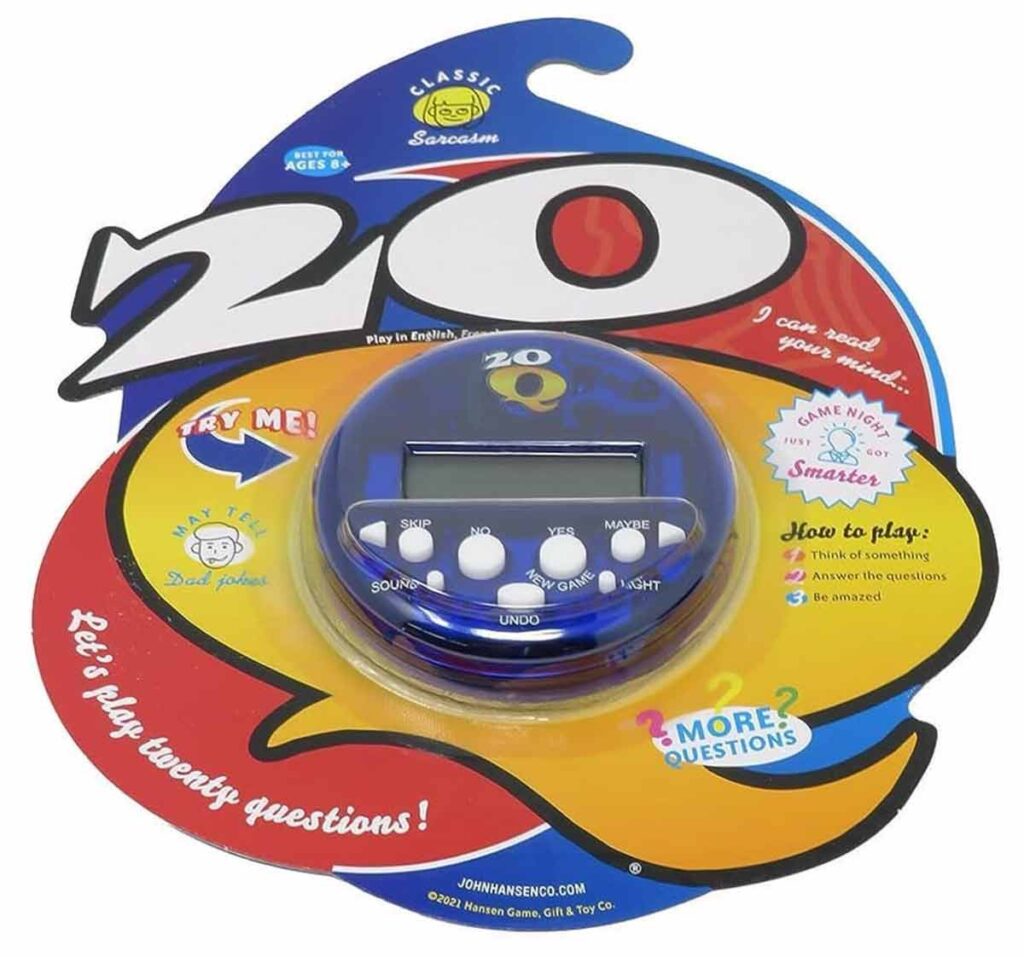

Specialized programs have been built to excel 20 questions using built-in programming. More than a decade ago an electronic toy called 20Q became famous for seemingly reading people’s minds. You think of any common object, and this little electronic gadget asks you a series of questions, then almost always guesses correctly.

The 20Q device was so good it could get the right answer about 80% of the time within 20 questions, and 98% of the time if you allowed it 5 extra questions. That’s better than most humans! How did it work? It uses a custom neural network that was trained specifically on the 20 Questions task.

The inventor of 20Q, Robin Burgener, started the project in the 1980s and let it learn by playing against thousands of people. Over the years, the system essentially built up a massive knowledge base of 10,000 possible objects and the typical yes/no answers associated with them. Unlike a human (or an LLM), the 20Q AI doesn’t think in words or sentences – it thinks in probabilities. With each answer you give, it updates a probability score for every object in its database, narrowing down the field. It will then choose the next question specifically to split the remaining possibilities as efficiently as possible. In essence, 20Q’s strategy was baked into its design. It was engineered to pick the most informative question at each step.

Back in 2006, ScienceLine posted an article exploring the workings of his game, and gave an early peek into the implications of AI across many types of tasks.

“This capability is not only what makes the game so eerily good at mind-reading, it’s also what excites Burgener about potential future applications for the AI, from tech support to medical triage. In any situation when someone could misunderstand a question or inadvertently answer incorrectly, the 20Q AI could approximate a human trained in recognizing those types of errors. Like a triage nurse, 20Q could theoretically learn how to accurately diagnose ailments by asking the right questions. Shrink this database into a handheld device like the game, and you’ve got a powerful tool for an emergency situation.”- Karen Schrok, ScienceLine.

Here is an interview with Mr. Burgener in mid 2018, before the popularization of large language models:

Because of this approach, the 20Q toy can ask some unusual questions that a human might not think of. It might appear to go on a tangent, but behind the scenes it knows why it’s asking. Burgener noted that “our strategy (as humans) tends to be get a vague idea and then focus in on one object… The 20Q AI, however, can consider every single object it knows simultaneously”, updating all of them at once with each answer. In other words, it doesn’t get stuck on one hypothesis too early the way people do.

It even handles wrong or misleading answers gracefully. Because it’s not following a strict binary decision tree, a mistaken answer doesn’t completely throw it off. The device will eventually notice if your answers are contradictory (say, if you accidentally indicated your “horse” was a vegetable on question one) and it will adapt by asking a question to clarify, effectively correcting course. This robustness and single-minded focus on the game logic is something a general large language models like ChatGPT lacks.

Another popular example is Akinator, a web-based game where a cartoon genie guesses which real or fictional character you’re thinking of. Akinator uses a vast database of characters and traits, and a set of cleverly pre-designed questions. It’s employing an expert-system style approach (with a bit of machine learning) to narrow down the character, and it’s remarkably successful within its domain.

These systems show that with the right structured knowledge and algorithms, 20 Questions isn’t an impossible task for a computer at all. A well-crafted narrow AI can outperform humans easily. But those systems are purpose-built for 20 Questions, whereas large language models, like ChatGPT, are generalists trying to play a specialist’s game.

Playing 20 Questions with a Large Language Models.

Models like GPT-4 or Claude weren’t trained to win at anything specific. Instead, they learned to predict the next word in sentences drawn from the internet, books, and more. They absorbed, with machine learning, vast knowledge bases. They learned facts, patterns, even strategies for games they were never explicitly taught.

Because of this, they can talk about almost anything, including games like 20 Questions. They can ask smart questions, guess answers, or simulate how a game might unfold. But there is a big difference: They’re not rule-followers or planners. They’re more like improv actors, responding in real time based on what sounds plausible based on the words given in the instructions (The prompt).

This gives them range. They can hold their own in a game of 20 Questions, up to a point. They can ask repeat questions, ask over 21 questions, and make plenty of mistakes. If they are the player that is “thinking” of the thing. It gets weird. You wonder if it is telling the truth when it claims you guesses it’s supposed secret word on your first try.

They don’t have the internal structure that older game AIs relied on. 20 Questions is simple on the surface. If you asked GPT-4 or Claude 3.5 to play poker against a world champion, it would need some help.

The help might come in the form of added tools: a chess engine to analyze positions, a game simulator, or extra memory systems that help it plan more carefully. Researchers are experimenting with ways to give language models this kind of scaffolding. But today’s general-purpose AIs are still improvisers. They’re broad and surprisingly capable, but when it comes to playing serious, rule-heavy games, they’re still learning the ropes.

When AI Asks the Questions

Playing the question-asking side of 20 Questions requires a mix of broad knowledge and clever strategy. A human “guesser” will usually start with big dividing questions (Is it animal, vegetable, or mineral?) to narrow down the field, and then zoom in on the details. This divide-and-conquer strategy is known to be optimal: each question should roughly cut the remaining possibilities in half. The trouble is, a vanilla AI like ChatGPT doesn’t automatically apply this strategy – it doesn’t really know it’s playing a narrowing-down game unless you prompt it to. By default, it generates questions that sound reasonable (often common questions it has seen in training data), but not necessarily the most strategic ones.

People experimenting with playing 20 Questions with LLMs found that the models (especially earlier versions like GPT-3.5) will sometimes meander or ask oddly specific questions too early, instead of eliminating big categories. It also has a tendency to waste turns by repeating or confirming information it already knows. In one analysis by Evan Pu, GPT-3.5 played hundreds of games of 20 Questions with itself and managed to guess the right answer in only 68 out of 1823 games. That’s a success rate of under 4%. (In many cases it ran out of its 20 questions without narrowing things down effectively.) By contrast, an average human player with a sound strategy can do quite well at this game. Humans intuitively know how to ask high-value questions, whereas a general LLM has to discover or be told that strategy in the course of the conversation.

“…there are plenty instances of the guesser asking question which the answer would’ve been already obvious, such as asking if a non-mammal would have fur, or asking about “hard plastic or metal” again, despite having it answered previously already. It is quite possible this behaviour can be curtailed with better prompting, maybe with self-reflection or similar techniques.” – Evan Pu. (2023). LLM self-play on 20 Questions

Why does the AI struggle here? A big reason is that logical planning and remembering constraints are not its forte. An LLM is good at producing fluent answers on open-ended topics, but a focused puzzle like 20 Questions highlights its weakness in reasoning. It doesn’t truly plan ahead. it just generates one question at a time based on probabilities. Without an internal strategy the AI’s questions can seem almost random or at least inefficient. It lacks the algorithmic search that a dedicated game-playing program might have.

Another limitation is the AI’s working memory. ChatGPT has a fixed context window (a few thousand tokens of recent conversation), which means it can only hold so much information at once. In a long game of 20 Questions, the model might start forgetting details from earlier or lose track of which question number it’s on if not explicitly reminded.

It’s strange that these incredibly advanced language models are bad at math and counting. You would think a computer, especially an “intelligent” one, could handle keeping an accurate count of a few questions. An LLM by itself has no built-in counter or long-term strategy unless we manually feed those back into the chatbot prompt each time. Models like ChatGPT-4 and Claude 3.5 operate in a single “forward pass” without iterative reasoning, which makes it hard for them to handle tasks requiring many steps of deduction. This may be a short lived phenomenon as AI models continue to advance. Many AI model providers are starting to build in multi-step reasoning and external tool access. Imagine that the AI has access to a separate place to store notes, a good calculator, etc.

For now, the AI is improvising the game rather than logically solving it. So it’s not too surprising that it fails epically at times, asking too many questions or missing obvious details that a human would catch.

There is a hack: if you give AI instructions how to play properly, it can follow those instructions to some extent. For example, telling it up front how to divide the search space. You could say something like:

“Think of each question as halving the possibilities…” may improve its performance. This would encourage it to “simulate” the built-in strategy used by the classic 20Q toy game mentioned earlier.

This kind of on-the-fly instruction compensates for its weaknesses in strategy, counting abilities, and limited memory. Still, without explicit training or instructions, even the best language model today is no match for a the classic purpose-built game, or even a skilled human in this guessing game.

As of the time of this writing, the Word Studio’s 20 Questions Game is running with our custom instructions paired with OpenAI’s GPT 4o. And the game has been designed for the player to hold the secret and the AI to ask the questions. If you try to play the other way around, the game is just not fun because there are too many inconsistencies.

When AI Holds the Secret

What if the AI thinks of an object and the human asks the yes/no questions? You’d think this would be easy for the AI. It just has to choose something and truthfully answer the questions about it. There’s no trickery required, just consistency. But role can actually trip up an AI model in many ways, and many players have grown skeptical of whether the AI is really playing fair.

The challenge is that an LLM doesn’t have a true “mind” where it holds a secret thought. It only has the conversation context. When we say, “Think of an object and don’t tell me,” maintaining that hidden object as a fixed reference throughout 20 questions is hard for a system that essentially generates each answer from scratch based on prior text. The AI might inadvertently change what it’s thinking of or interpret questions inconsistently over the course of the game.This can lead to contradictory answers. For example, in one self-play experiment an AI chose “abacus” as the secret object – but when asked “Does the object produce sound when in use?” it answered “No.” Anyone who’s played with an abacus knows it does make clacking sounds, so that answer was incorrect. The AI wasn’t trying to lie; it probably didn’t have a reliable internal model of an abacus’s properties, or it reasoned in some flawed way. Models are trained using text that is found in books, articles, internet forums, research papers, and other writings from history to the present. It may be that the noise that an abacus make isn’t written about often, so the chances it would associate sound with an abacus go down. Small mistakes like that can completely throw off the human guesser.

In this interview by The Cosmos Institute, AI scientist Murray Shanahan of Imperial College of London touches briefly on the 20 questions “secret” problem (Around the 11 minute mark):

“As you can imagine, that induces this whole tree of—of possible conversations, you know? So what the language model actually generates is a probability distribution over all the possible words. And then what actually comes out is, uh, you sample from that probability distribution. It’s absolutely inherent in the way large language models are built, that it’s not going to commit at the beginning of the conversation to exactly what the object, uh, is. So at the beginning of the conversation, all of these possibilities still exist, and they still continue to exist all along. So even though it should have committed to a particular object, it never really has.” – Murray Shanahan.

Murray wrote this research paper on AI role-playing in 2023. It includes some observations specifically about the game of 20 Questions. If you want to go deeper, it’s worth checking out.

Such slip-ups understandably make people suspect the AI is not being truthful. If the AI’s answers don’t line up with any real object, you start to wonder if it even picked something in the first place. The truth is, unless carefully guided, a language model might effectively be “deciding” on an object on the fly as it goes, ensuring its answers fit whatever questions have been asked so far. From the outside, this looks like cheating (never revealing until you ask, or giving up too easily and letting you win). The AI could theoretically dodge losing by never actually committing to a single answer until the end. We don’t think the AI is intentionally cheating, and it has no intent at all, but this behavior is a side effect of the way it works. The weights given to the associations between words. It has no built-in rule enforcing consistency with this secret person, place or thing. Maintaining consistency over many turns (what one researcher called “internal consistency” or an inception-like loop for the model) is genuinely difficult for current AIs.

Another issue is that the AI might misunderstand a question or have gaps in its world knowledge, leading to a wrong yes/no that misleads the questioner. Unlike a human answerer, it doesn’t have common sense validation of “Oops, if I say yes to that, does it still make sense given my object?” It just computes an answer that seems statistically appropriate.

As mentioned before, the AI isn’t great at counting turns. It might not notice that the 20th question has passed. We’ve seen cases where an AI continued answering into question 21 and beyond, oblivious to the limit, until the human pointed it out. All these technical limitations mean that playing the answerer role fairly and flawlessly is currently a tall order for a general-purpose AI.

From a user perspective, these inconsistencies break the illusion of a fair game. People might joke that the AI “changes the answer at the end” or isn’t really thinking of anything at all. In our experiments, we’ve had to put constraints and reminders in the AI’s prompt to try to keep it honest: for instance, explicitly telling it, “You have chosen an object, now keep your answers consistent with that choice.” Even so, absolute trust is hard to come by – one weird answer and the magic is gone.

Fun, Insightful, and Still Improving

Our forays into 20 Questions with AI have been both entertaining and insightful. On the one hand, it’s amazing that you can have a chatbot play this game at all just by giving it the instruction. Sometimes the AI really will impress you by guessing your obscure object at question 17, or by answering every question about its secret item without slipping up. Those moments feel almost magical. But just as often, the cracks show: the AI asks something nonsensical, or it forgets a clue you gave it, or it confidently answers “No” to “Is it a book?” only to later reveal the answer was in fact a book. It’s a bit like playing with someone who has a very poor memory or a child who might impulsively change the game rules; you have to cut it some slack or the game falls apart. The current LLM simply doesn’t have an intrinsic concept of “fair play” or a built-in commitment to a hidden truth the way a person does. These failures can be frustrating for players and highlight the difference between genuine reasoning and the AI’s imitation of reasoning. In a conversational, low-stakes setting, these hiccups are usually harmless and even funny.

Newer models like GPT-4o already exhibit more coherent strategy and fewer blunders, suggesting that scale and refined training do yield emergent improvements in tasks like this. There is even research interest in using games like 20 Questions as benchmarks for an AI’s consistency and problem-solving skills. Each iteration of these models brings us a little closer to AI that can handle multi-turn reasoning tasks with more human-like accuracy.

For now, playing 20 Questions with AI is best approached in the spirit of exploration. It’s a neat way to test an AI’s limits and see how it responds when pushed into a corner of logic. Just don’t bet on it beating you in a fair match every time.